Manycore Grid Architecture

This topic describes the architecture that lets users fairly share the manycore Xeon Phi servers.

Architecture Description

Servers Specification

phi02 and phi03 - SGI model C2108-GP5 (grid nodes):

- 2 x Intel Xeon E5-2699v3

- 128 GB DDR4 2133 MHz

- 2 x Hard Disk Drives 2 TB SATA

- 2 x Infiniband FDR (Mellanox)

- 2 x SSD NVM Express (NVMe) Intel 750 Series (1.2 TB each)

- 1 x PCI Intel TrueScale QLE7342

- 1 x 40Gbps Intel XL710-QDA2

- 2 x Intel Xeon Phi 7210P

- 2 x Intel Xeon Phi 5110P

- Redundant Power Supply

- 2 x Intel(R) Xeon(R) CPU E5-2670

- 64 GB DDR3 1600 MHz

- 2 x Hard Disk Drives 2 TB SATA

- 3 x Intel Xeon Phi 3120

- 2 x Intel I350 GbE

- 2 x CPU

- 4 GB RAM

- 64 GB HDD

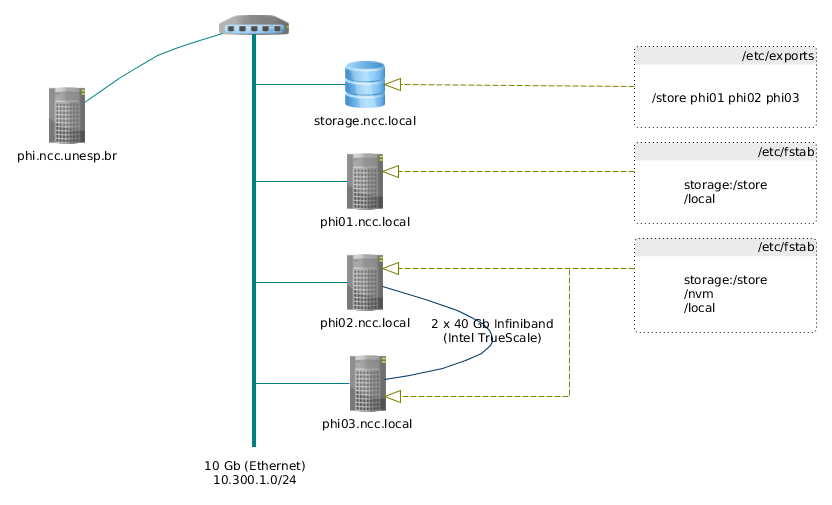

Layout Specification

The grid nodes and servers are connected by a 10 Gb Ethernet link. The grid nodes share a common filesystem mounted over the Network File System (NFS). This mount point stores the users' files and is available at the /store/$USER path. It is intended for the large, persistent files that users need to run their software.

The grid nodes phi02 and phi03 also offer users a high-speed NVMe disk, available at the /nvm directory. This device can reach peaks of 2200 MB/s for sequential reads and 900 MB/s for sequential writes. It should be used only as scratch space to speed up users' jobs at run time, not as persistent storage. Users are therefore responsible for copying their output files back to their persistent storage area.
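The workflow implied by this layout (stage inputs onto the fast disk, run there, copy results back to persistent storage) could look roughly like the following Python sketch. The function name `run_on_scratch` and its parameters are hypothetical and are not part of the grid's tooling; only the /store/$USER and /nvm paths come from the description above, and the per-job subdirectory under /nvm is an assumption made to keep users from colliding.

```python
import shutil
import tempfile
from pathlib import Path


def run_on_scratch(inputs, persistent_dir, scratch_root, job):
    """Stage inputs on fast scratch, run the job there, copy new files back.

    inputs         -- iterable of input file paths (hypothetical parameter)
    persistent_dir -- where results are kept, e.g. /store/$USER/results
    scratch_root   -- fast scratch area, e.g. /nvm
    job            -- callable that receives the scratch work directory (Path)
    Returns the sorted names of the files copied back.
    """
    persistent_dir = Path(persistent_dir)
    persistent_dir.mkdir(parents=True, exist_ok=True)

    # Assumption: a private per-job directory avoids clashes between users.
    workdir = Path(tempfile.mkdtemp(prefix="job-", dir=scratch_root))
    try:
        staged = set()
        for src in inputs:
            dst = workdir / Path(src).name
            shutil.copy2(src, dst)          # stage input onto the fast disk
            staged.add(dst.name)

        job(workdir)                        # run the workload on scratch

        copied = []
        for item in sorted(workdir.iterdir()):
            # Copy back only files the job created, not the staged inputs.
            if item.is_file() and item.name not in staged:
                shutil.copy2(item, persistent_dir / item.name)
                copied.append(item.name)
        return copied
    finally:
        # Free the shared scratch space whether or not the job succeeded.
        shutil.rmtree(workdir, ignore_errors=True)
```

A call might look like `run_on_scratch(["/store/alice/data.bin"], "/store/alice/results", "/nvm", my_job)`, where `alice` and `my_job` are placeholders. Note that this sketch does not copy back inputs that the job modifies in place, and it only looks at files in the top level of the work directory.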
